NVIDIA Unleashes Faster Inference Engine for LLMs: Revolutionizing AI Performance

In a significant move, NVIDIA has launched a new inference engine designed to accelerate large language models (LLMs). As demand for AI-driven applications continues to skyrocket, the engine aims to tackle rising inference costs and make deploying language models significantly more efficient.

The AI race has seen a notable shift in focus from training costs to inference costs as more organizations deploy language models in production. Training is a largely one-time expense, but inference runs on every user request. As LLMs grow in size and complexity, inference costs can quickly balloon and become a significant hurdle to the widespread adoption of these models.

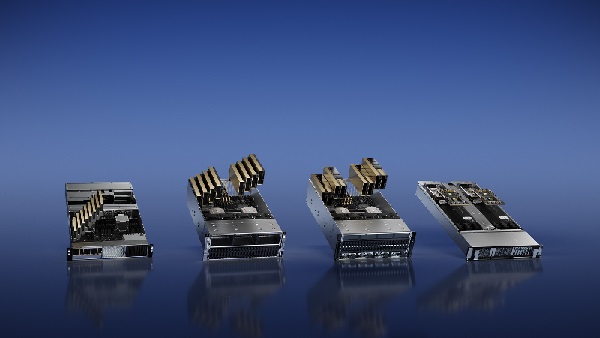

Enter NVIDIA’s solution: TensorRT, the company’s widely used SDK for high-performance deep learning inference. NVIDIA has now introduced a specialized version of TensorRT tailored specifically to language models running on H100 GPUs, a significant step forward in optimizing LLM inference performance.

By using NVIDIA’s new inference engine, organizations can achieve faster and more efficient language model inference. This could benefit a broad range of AI applications, from natural language understanding and sentiment analysis to chatbots and content generation.

A dedicated inference engine for language models on H100 GPUs offers clear benefits to developers and researchers alike, letting them harness the capabilities of LLMs without sacrificing performance or scalability. Organizations can deploy sophisticated language models with greater confidence that they will handle demanding workloads quickly and efficiently.

NVIDIA’s push for faster LLM inference aligns with its commitment to advancing AI technology and making it accessible to a wider audience. By tackling the critical challenge of inference costs, NVIDIA paves the way for broader adoption of LLMs in industries ranging from healthcare and finance to customer service and content creation.

As the AI landscape continues to evolve, innovations like NVIDIA’s inference engine help organizations unlock the potential of AI-driven language models. Deploying LLMs can become more cost-effective and efficient, opening the door to new possibilities across many sectors.

Get ready to dive into a world of AI news, reviews, and tips at Wicked Science! If you’ve been searching the internet for the latest insights on artificial intelligence, look no further. Staying up to date with the ever-evolving field of AI can be a challenge, but Wicked Science is here to make it easier. Our website is packed with articles that will keep you informed about the latest trends, breakthroughs, and applications in the world of AI. Whether you’re a seasoned AI enthusiast or just starting your journey, Wicked Science is your go-to destination for all things AI. Visit our website today to discover more.
