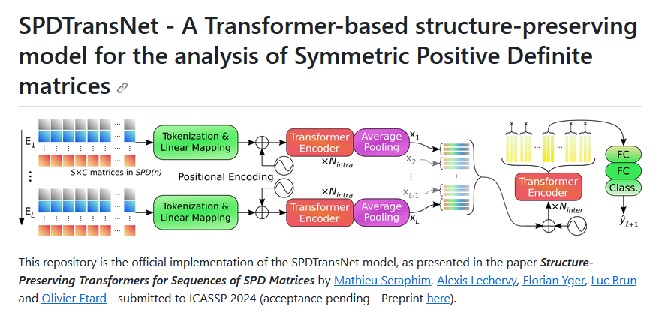

Transformers, well known for their effectiveness on text and image data, are now finding applications in sleep analysis. Researchers have developed SPDTransNet, a modified Transformer that uses features derived from EEG data to classify sleep stages. The project is a notable step toward automated and more detailed analysis of sleep patterns.

The SPDTransNet model adapts Transformers to analyze timeseries of Symmetric Positive Definite (SPD) matrices derived from electroencephalogram (EEG) data. Such matrices, for example covariance matrices computed between EEG channels, describe the functional connectivity of the signals. Feeding a sequence of them into a Transformer lets the model use this connectivity information when determining sleep stages.
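To make "a timeseries of SPD matrices" concrete, here is a minimal NumPy sketch that splits a multichannel EEG recording into fixed-length windows and computes one channel-by-channel covariance matrix per window. The helper name `epoch_covariances`, the synthetic data, the assumed 100 Hz sampling rate with 30-second windows, and the small ridge term that guarantees positive definiteness are all illustrative assumptions; the preprocessing actually used in SPDTransNet (filtering, windowing, channel selection) may differ.

```python
import numpy as np

def epoch_covariances(eeg: np.ndarray, window: int, ridge: float = 1e-6) -> np.ndarray:
    """Hypothetical helper: split a (channels, samples) EEG array into
    non-overlapping windows and return one covariance matrix per window,
    with shape (n_windows, channels, channels)."""
    n_channels, n_samples = eeg.shape
    n_windows = n_samples // window  # any trailing partial window is dropped
    covs = np.empty((n_windows, n_channels, n_channels))
    for k in range(n_windows):
        segment = eeg[:, k * window:(k + 1) * window]
        segment = segment - segment.mean(axis=1, keepdims=True)  # zero-mean each channel
        cov = segment @ segment.T / (window - 1)                 # sample covariance
        # Assumed regularization: a small ridge keeps every matrix strictly
        # positive definite even if a window is flat or rank-deficient.
        covs[k] = cov + ridge * np.eye(n_channels)
    return covs

# Synthetic example (assumption): 4 channels, 3 minutes at 100 Hz,
# cut into 30-second windows of 3000 samples.
rng = np.random.default_rng(0)
eeg = rng.standard_normal((4, 18000))
covs = epoch_covariances(eeg, window=3000)
print(covs.shape)                             # (6, 4, 4)
print(np.all(np.linalg.eigvalsh(covs) > 0))  # True
```

The result is a timeseries of six 4×4 SPD matrices, one per window, which is the kind of input the model consumes.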

Working with SPD matrices lets SPDTransNet analyze EEG-derived functional connectivity while preserving the matrices' mathematical structure (symmetry and positive definiteness), which ordinary layers applied to flattened matrices do not guarantee, and still benefit from the modeling capacity of Transformers. This makes the approach well suited to sleep staging.
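One common way to respect the geometry of SPD matrices is the log-Euclidean approach: map each matrix to a symmetric matrix with the matrix logarithm, then flatten its upper triangle into a vector that standard layers can process. The sketch below assumes this approach purely for illustration; it is not necessarily the exact mechanism SPDTransNet uses, and the helper names are hypothetical. Off-diagonal entries are scaled by √2 so that the vector's Euclidean norm equals the Frobenius norm of the log-matrix, and the mapping is inverted with the matrix exponential.

```python
import numpy as np

def spd_log(mat: np.ndarray) -> np.ndarray:
    """Matrix logarithm of an SPD matrix via its eigendecomposition."""
    eigvals, eigvecs = np.linalg.eigh(mat)
    return (eigvecs * np.log(eigvals)) @ eigvecs.T  # V diag(log l) V^T

def spd_exp(sym: np.ndarray) -> np.ndarray:
    """Matrix exponential of a symmetric matrix; always returns an SPD matrix."""
    eigvals, eigvecs = np.linalg.eigh(sym)
    return (eigvecs * np.exp(eigvals)) @ eigvecs.T  # V diag(exp l) V^T

def _triu_weights(n: int):
    rows, cols = np.triu_indices(n)
    weights = np.where(rows == cols, 1.0, np.sqrt(2.0))  # sqrt(2) off the diagonal
    return rows, cols, weights

def vectorize(sym: np.ndarray) -> np.ndarray:
    """Hypothetical helper: upper triangle of a symmetric matrix as a flat token."""
    rows, cols, weights = _triu_weights(sym.shape[0])
    return sym[rows, cols] * weights

def unvectorize(vec: np.ndarray, n: int) -> np.ndarray:
    """Hypothetical helper: rebuild the symmetric matrix from its token."""
    rows, cols, weights = _triu_weights(n)
    sym = np.zeros((n, n))
    sym[rows, cols] = vec / weights
    sym[cols, rows] = vec / weights
    return sym

# Usage: a random 4x4 SPD matrix becomes a 10-dimensional token and back.
rng = np.random.default_rng(1)
a = rng.standard_normal((4, 4))
spd = a @ a.T + 4 * np.eye(4)
token = vectorize(spd_log(spd))
recovered = spd_exp(unvectorize(token, 4))
print(token.shape)                   # (10,)
print(np.allclose(recovered, spd))  # True
```

Because the matrix exponential of any symmetric matrix is SPD, every token in this space maps back to a valid SPD matrix, which is one way to keep the structure intact while using vector-based layers.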

The research paper “SPDTransNet: Transformers for Sleep Analysis” by Mathieu Seraphim et al. presents the SPDTransNet model and applies it to sleep stage classification. The authors propose a Transformer-based approach for analyzing EEG-derived covariance matrices and report high accuracy in automatic sleep staging.
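In a Transformer-based approach, each window's token is related to the tokens of neighboring windows through self-attention. The following generic single-head scaled dot-product attention in NumPy sketches that step under stated assumptions: the sequence of 7 windows, the token size of 10 (the upper triangle of a 4×4 matrix), the head size of 8 and the random, untrained weights are all hypothetical, and SPDTransNet's own layers, which the authors adapt to preserve the matrices' structure, are not reproduced here.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """Generic single-head scaled dot-product self-attention.
    tokens: (seq_len, d_model); w_q, w_k, w_v: (d_model, d_head)."""
    q = tokens @ w_q
    k = tokens @ w_k
    v = tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # (seq_len, seq_len) similarity scores
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights

# Hypothetical input: 7 consecutive windows, each a 10-dimensional token.
rng = np.random.default_rng(2)
tokens = rng.standard_normal((7, 10))
w_q, w_k, w_v = (rng.standard_normal((10, 8)) * 0.1 for _ in range(3))
out, attn = self_attention(tokens, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (7, 8) (7, 7)
```

Each row of `attn` is a probability distribution over the 7 windows, so the output for a given window mixes information from its neighbors; in a trained model these weights would be learned rather than random.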

SPDTransNet and similar models hold promise for advancing sleep analysis. By applying Transformers to EEG data, they may make automatic sleep staging faster and more accurate, improving our understanding of sleep patterns and related disorders.

Research in this area has also explored combining Bayesian relations with attention mechanisms in Transformer architectures for sleep staging. For example, the Bayesian Spatial-Temporal Transformer (BSTT) integrates Bayesian relations into a Transformer to improve sleep staging accuracy.

SPDTransNet is not the only model exploring Transformers for sleep analysis. MultiChannelSleepNet, another Transformer encoder-based model, performs automatic sleep stage classification on multichannel polysomnography (PSG) data. Together, these models show how adaptable Transformers are across different sleep analysis settings.

Applying Transformers to sleep analysis is a relatively new but rapidly evolving field. As researchers continue to refine these models, further advances could improve sleep research as well as the diagnosis and treatment of sleep-related disorders.

SPDTransNet and similar Transformer models mark an exciting development in sleep analysis. By adapting Transformers to EEG-derived SPD matrices, researchers can study sleep stages in greater depth, and continued progress in Transformer-based models could lead to better diagnostics and more personalized treatment for sleep-related disorders.
