Google’s recent reveal of the Gemini 1.5 AI model has sparked significant interest in the tech community. With promises of enhanced performance and an array of new features, this iteration builds upon its predecessor’s successes.

The move to a Mixture-of-Experts (MoE) architecture and the improved processing capabilities hint at a potential game-changer in the AI landscape. As industry experts delve into the implications of these advancements, the question arises: how will Google’s Gemini 1.5 influence the future of artificial intelligence and its applications?

Key Takeaways

- Gemini 1.5 Pro processes vast data efficiently.

- Safety evaluations ensure responsible deployment.

- Future plans include wider accessibility with varied pricing.

- Gemini 1.5 showcases impressive performance across diverse applications.

Key Features of Gemini 1.5

With its upgraded capabilities and enhanced performance, Gemini 1.5 introduces a new era of AI modeling through its advanced features. The latest version, built on a new Mixture-of-Experts (MoE) architecture, boasts a longer context window compared to its predecessor, Gemini 1.0.

Gemini 1.5 Pro achieves comparable quality to 1.0 Ultra while requiring less computational power, offering developers and applications new possibilities. Notably, Gemini 1.5 Pro can process up to one million tokens in production, handling vast amounts of information in one go.

Outperforming Gemini 1.0 Pro in 87% of benchmarks, Gemini 1.5 Pro also shines in long-context evaluations like Needle In A Haystack (NIAH) and Machine Translation from One Book (MTOB), demonstrating its performance and potential in the AI modeling landscape.
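A needle-in-a-haystack test plants one short fact in a long filler text and checks whether the model can retrieve it. The sketch below shows the general shape of such a test. It is not Google's evaluation harness: the function names, the filler sentence, and `stub_model` (a plain string search standing in for a real model call) are all assumptions made for illustration.

```python
def build_haystack(filler, needle, total_sentences, depth):
    """Return a long text with `needle` inserted at fractional `depth`.

    depth=0.0 places the needle at the start, depth=1.0 at the end.
    """
    sentences = [filler] * total_sentences
    position = round(depth * total_sentences)
    sentences.insert(position, needle)
    return " ".join(sentences)


def run_niah(ask_model, needle, answer, question, total_sentences, depths):
    """Run one retrieval check per depth; map each depth to True or False."""
    filler = "The sky was a flat grey that morning."
    results = {}
    for depth in depths:
        context = build_haystack(filler, needle, total_sentences, depth)
        reply = ask_model(context, question)
        results[depth] = answer in reply
    return results


def stub_model(context, question):
    """Hypothetical stand-in for a model call: a simple string search."""
    for sentence in context.split(". "):
        if "magic number" in sentence:
            return sentence
    return ""


results = run_niah(
    ask_model=stub_model,
    needle="The magic number is 7481.",
    answer="7481",
    question="What is the magic number?",
    total_sentences=200,
    depths=[0.0, 0.5, 1.0],
)
```

To test a real model, `stub_model` would be replaced with a function that sends the context and question to the model. Varying `total_sentences` and `depths` shows whether retrieval holds up as the context grows and as the needle moves.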

Enhanced Understanding and Performance

Gemini 1.5’s advancements in understanding and performance mark a significant leap in AI modeling capabilities. This is exemplified by its enhanced features and superior processing efficiency. The upgraded model surpasses its predecessor by offering a longer context window, enabling it to process up to one million tokens in production.

This allows Gemini 1.5 Pro to handle vast amounts of information in a single prompt, including 1 hour of video, 11 hours of audio, and large codebases. Notably, Gemini 1.5 Pro outperformed Gemini 1.0 Pro in 87% of benchmarks and performed strongly in evaluations such as Needle In A Haystack (NIAH) and Machine Translation from One Book (MTOB). These results point to stronger long-context understanding and improved processing capabilities.
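The media figures above can be turned into a rough budgeting rule. The sketch below assumes that 1 hour of video, or 11 hours of audio, each fills the whole one-million-token window. That assumption yields approximate per-second rates, not official tokenization rates, and the function names are illustrative.

```python
CONTEXT_WINDOW = 1_000_000  # tokens, the preview limit cited in the article

# Assumed rates, derived only from the article's "1 hour of video" and
# "11 hours of audio" figures; real tokenization rates may differ.
VIDEO_TOKENS_PER_SECOND = CONTEXT_WINDOW / (1 * 3600)
AUDIO_TOKENS_PER_SECOND = CONTEXT_WINDOW / (11 * 3600)


def estimated_tokens(video_seconds=0, audio_seconds=0, text_tokens=0):
    """Estimate the total token count of a mixed-media prompt."""
    total = (
        video_seconds * VIDEO_TOKENS_PER_SECOND
        + audio_seconds * AUDIO_TOKENS_PER_SECOND
        + text_tokens
    )
    return round(total)


def fits_in_context(video_seconds=0, audio_seconds=0, text_tokens=0):
    """Return True if the estimated prompt size fits in the context window."""
    return estimated_tokens(video_seconds, audio_seconds, text_tokens) <= CONTEXT_WINDOW


# A 44-minute film plus a short written question.
film_fits = fits_in_context(video_seconds=44 * 60, text_tokens=200)
```

Under these assumed rates a 44-minute video uses roughly three quarters of the window, which leaves room for a question and some supporting text.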

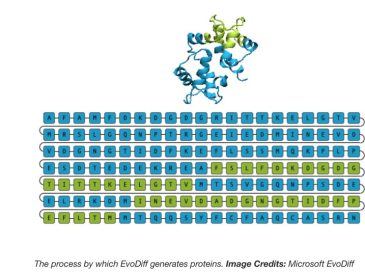

New MoE Architecture Benefits

The new Mixture-of-Experts (MoE) architecture in Gemini 1.5 is the main source of its efficiency gains. Rather than running one large network on every input, an MoE model is divided into smaller expert networks and learns to activate only the most relevant ones for a given input.

Because each input passes through only a few experts, the model can specialize different experts for different aspects of a task while spending less compute per request. The modular structure of MoE also helps scalability, letting the model adapt to varying workloads and input types.
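The routing idea can be sketched in a few lines. This is a generic top-k gated MoE layer in plain Python, not Gemini's implementation. Google has not published those details, so the class name, dimensions, random weights, and the choice of top-2 routing are all assumptions.

```python
import math
import random


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(weights, x):
    return sum(w * xi for w, xi in zip(weights, x))


class TinyMoE:
    """Toy MoE layer: a router sends each input to its top_k experts."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one scoring vector per expert.
        self.router = [
            [rng.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)
        ]
        # Each expert is a small dim x dim linear map.
        self.experts = [
            [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]
            for _ in range(num_experts)
        ]

    def route(self, x):
        """Return (expert_index, weight) pairs for the top_k experts."""
        gate = softmax([dot(w, x) for w in self.router])
        ranked = sorted(range(len(gate)), key=lambda i: gate[i], reverse=True)
        chosen = ranked[: self.top_k]
        norm = sum(gate[i] for i in chosen)
        return [(i, gate[i] / norm) for i in chosen]

    def forward(self, x):
        """Run only the chosen experts and blend their outputs."""
        out = [0.0] * len(x)
        for index, weight in self.route(x):
            expert_out = [dot(row, x) for row in self.experts[index]]
            out = [o + weight * y for o, y in zip(out, expert_out)]
        return out


moe = TinyMoE(dim=4, num_experts=8, top_k=2)
token = [1.0, 0.5, -0.5, 2.0]
routes = moe.route(token)
output = moe.forward(token)
```

With eight experts and `top_k=2`, only a quarter of the expert weights are used for any single input. This is the general mechanism by which an MoE model can hold many parameters while keeping per-request compute low.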

Performance Comparison With 1.0 Ultra

In direct evaluations against the previous generation, Gemini 1.5 Pro matched the quality of 1.0 Ultra while using less compute, thanks in part to its new Mixture-of-Experts architecture. Its longer context window also enables new capabilities for developers and applications.

Against its direct predecessor, Gemini 1.5 Pro outperformed 1.0 Pro in 87% of benchmarks, and it excelled in the Needle In A Haystack (NIAH) and Machine Translation from One Book (MTOB) evaluations.

These results highlight the significant advancements made in the latest iteration, solidifying Gemini 1.5 as a powerful AI model for various applications.

Impressive Processing Capabilities

With remarkable processing prowess, Gemini 1.5’s capabilities extend to handling vast amounts of information across various media formats efficiently. This upgraded version excels in processing up to one million tokens in production scenarios, allowing for the seamless handling of extensive data in one go.

It can process 1 hour of video content, 11 hours of audio recordings, and large codebases, showing its versatility across media types. Gemini 1.5 Pro outperformed Gemini 1.0 Pro in 87% of benchmarks, demonstrating its stronger processing capabilities.

Its stellar performance in evaluations like Needle In A Haystack (NIAH) and Machine Translation from One Book (MTOB) further underscores its efficiency in handling diverse information types with precision and speed.

Safety and Responsible Deployment

Ensuring the safe and responsible deployment of Gemini 1.5 is paramount in the advancement of AI technology. Google has conducted extensive evaluations ahead of release to support the safe deployment of this enhanced AI model, providing reassurance to users.

A limited preview of Gemini 1.5 Pro with a one million token context window has been made available to developers and enterprise customers. The release of Gemini 1.5 via AI Studio and Vertex AI initially at no cost reflects a commitment to responsible deployment.

Future plans include introducing pricing tiers with different token context windows to cater to diverse needs, ultimately aiming to make Gemini 1.5 accessible to a wider user base while upholding safety and responsibility in AI innovation.

Accessibility and Future Pricing

The forthcoming pricing structure for Gemini 1.5 will introduce diversified token context windows to cater to a broader range of user requirements, ensuring enhanced accessibility and utility of the AI model.

This approach aims to make Gemini 1.5 more inclusive and adaptable to different user needs, from individual developers to large enterprise customers. By offering various token context window options, Google is paving the way for a more flexible pricing model that aligns with the diverse demands of users across industries.

This strategy not only enhances the accessibility of Gemini 1.5 but also underscores Google’s commitment to providing a scalable and cost-effective solution for leveraging advanced AI capabilities.

Versatile Applications and Use Cases

Google’s Gemini 1.5 AI model showcases remarkable adaptability across a myriad of applications and use cases. Its demonstrated ability to process a 44-minute silent movie and effectively handle multimodal queries highlights its versatility.

The model’s impressive performance against benchmarks and efficient handling of diverse information types make it suitable for a wide range of applications and industries. From answering questions based on video content to processing large codebases, Gemini 1.5 proves its capability to tackle various tasks efficiently.

This adaptability opens doors for innovative solutions in fields such as content analysis, customer service automation, and data processing, positioning Gemini 1.5 as a valuable tool for diverse business needs.

Future Innovations and User Engagement

Gemini 1.5’s trajectory in the realm of AI models signals a forward-looking approach towards future innovations and enhanced user engagement. Google’s commitment to advancing AI technology is evident through the development of Gemini 1.5, which not only offers upgraded features and performance enhancements but also aims to make its capabilities accessible to a broader user base.

With plans for wider releases of the 1.5 Pro version featuring different pricing tiers based on token context windows, Google is striving to cater to varied user needs. By enabling new capabilities for developers and applications, Gemini 1.5 sets the stage for increased user engagement and adoption, paving the way for a more inclusive and dynamic AI landscape.

Frequently Asked Questions

How Does Gemini 1.5 Pro Compare to Other AI Models in Terms of Processing Efficiency and Performance?

Gemini 1.5 Pro is best compared against Google’s own earlier models. With its upgraded architecture, it outperforms Gemini 1.0 Pro in 87% of benchmarks and reaches quality comparable to 1.0 Ultra while using less compute.

What Specific Measures Have Been Taken to Ensure the Safe Deployment of Gemini 1.5 Pro?

To ensure the safe deployment of Gemini 1.5 Pro, extensive evaluations have been conducted, providing reassurance to users. A limited preview with a one million token context window is available to developers and enterprise customers. Plans for pricing tiers aim to make the model accessible to a wider audience.

Can Gemini 1.5 Pro Be Integrated With Existing AI Frameworks and Tools for Seamless Deployment in Various Industries?

Gemini 1.5 Pro is offered through AI Studio and Vertex AI, which is the route for developers and enterprise customers to build it into existing tools and workflows across industries. Its enhanced capabilities, longer context window, and improved understanding make it a valuable addition to diverse applications.

Are There Any Plans to Introduce Additional Features or Capabilities to Gemini 1.5 Pro in Future Updates?

There are ongoing plans to introduce additional features and capabilities to Gemini 1.5 Pro in future updates. These enhancements aim to further improve performance, expand functionalities, and cater to evolving user needs and industry requirements.

How Does Gemini 1.5 Pro Handle Privacy and Data Security Concerns, Especially When Processing Large Amounts of Information in One Sitting?

Gemini 1.5 Pro addresses privacy and data security concerns by implementing robust measures such as encryption, access controls, and anonymization techniques. These safeguards ensure secure processing of large volumes of information, safeguarding user data and privacy.
